It seems like an odd time for such a conflict.

The most recent acceleration of advancement in artificial intelligence (AI) technology started only about a year and a half ago.

With so many incredible breakthroughs in the time since, we have so much to be excited about.

Anyone with an internet connection can now benefit from the utility of large language models (LLMs) like ChatGPT, Gemini, Grok, Claude, and many others.

Deep learning has proven remarkably capable at pattern recognition, enabling incredibly useful applications in areas like radiology, where an AI can outperform a team of human experts.

Grand challenges have even been solved, like accurately predicting how all known proteins fold, empowering the biopharmaceutical industry to accelerate drug development. Or consider developing an AI that can safely “drive” vehicles in just about any environment we would ever drive in, using computer vision alone.

The developments are so exciting and tangible that we can see a path towards a much brighter future.

And yet…

An incredible battle is being waged against the open development of AI.

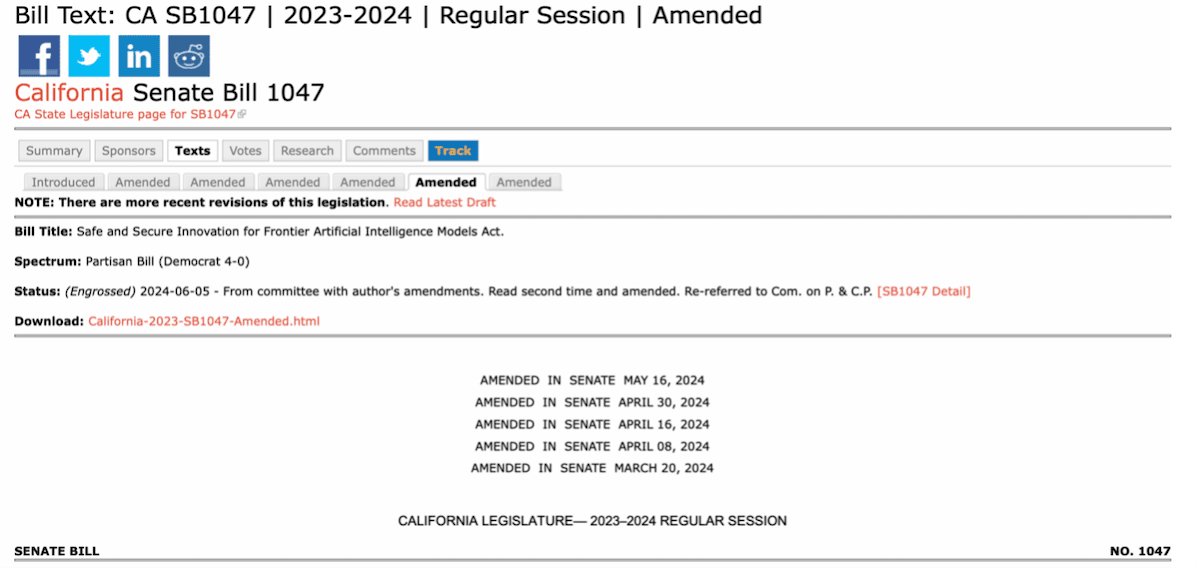

In California, Senate Bill 1047 (SB1047), titled the “Safe and Secure Innovation for Frontier Artificial Intelligence Models Act,” passed the CA State Senate 32-1 on May 21st.

A more accurate name for the bill could have been the “Centralized Power and Control of AI Act.”

SB1047 is an insane piece of legislation, especially considering that about 20% of California’s massive economy is from the tech sector.

Why would “they” want to stifle development?

Behind this dangerous piece of legislation is an AI “doomer” organization known as the Center for AI Safety.

Of all things, the majority of the organization’s funding to date came from the most famous “effective altruist,” Sam Bankman-Fried (SBF), who was recently sentenced to 25 years in prison for one of the largest frauds in history.

Even more ironic was SBF’s estimated $500 million investment in Anthropic, one of the largest and most well-funded AI companies, which would stand to benefit from SB1047 if it’s passed and enacted this August.

The Center for AI Safety, of course, tries hard to position itself as altruistic and acting in our best interests. It puts the risk of AI right up there with nuclear wars and pandemics.

Therefore it sponsored SB1047…

And its game plan has been pretty simple:

Unfortunately, that’s not the worst of it.

The details of the “Safe and Secure Innovation for Frontier Artificial Intelligence Models Act” are a disaster.

First, SB1047, if passed, will create a new CA state regulatory agency — the Frontier Model Division. And it puts the burden of regulatory compliance on the AI developers.

The idea is that this agency will be funded by fees charged to AI developers (totally unnecessary friction and expense).

Next, the bill centers on the definition of “covered models.” The “models,” of course, are AI models.

A covered model, then, is defined as:

The rough idea of the definition above is that any model trained with so much computational power that hundreds of millions of dollars had to be spent to create it… is considered a covered model. And will therefore be tightly regulated.

By today’s standards, only a very limited number of very well-funded public and private AI companies will likely build models that meet the definition of a covered model. These are the kinds of companies I have written about in Outer Limits, including Microsoft, OpenAI, Anthropic, Alphabet (Google), Meta, X, etc.

What about everyone else?

Smaller companies or organizations have limited choices under this structure. They can apply for an exemption from the regulations, claiming that their models do not meet the definition of a covered model. This would allow them to operate, but not without risk (see below).

Or they can spend an immense amount of time and money trying to remain compliant with the new regulatory AI “regime.”

And if the developers don’t comply with the regulations? There’s risk of a felony and jail time.

Worse yet are the practical implications of the bill’s liability clauses.

SB1047 stipulates that if an organization or team of developers creates software that is then modified and used for criminal activity, that organization or team of developers can be held liable.

To use a simple example, let’s say that a team developed some open-source software to automatically read and reply to e-mails. Perhaps it’s software to automate the scheduling of meetings…

Let’s say some bad actors take that software, modify it, and use it as a trojan horse to install malicious software on the recipients’ computing systems. SB1047 states that the people who created the open-source software are liable for the actions of the bad actors and whatever damage they cause.

These regulations are a direct shot at the open-source community, as well as at smaller upstarts building with AI.

Heavy-handed regulations like this, with their complex and expensive regulatory processes, benefit those who can afford them and disadvantage those not equipped to manage the expense and bureaucracy.

The people behind the attack on open-source AI software position their reason as “keeping everyone safe.” If they stifle the open-source community with weaker AI and disincentives, then that software won’t get in the hands of bad actors in the first place. That’s their idea anyway.

How naïve…

Have a look below at what China-based Kuaishou just released. It is a text-to-video generative AI on par with OpenAI’s Sora model. The prompt used to create the incredible video below was:

A car driving on the road in the evening with a gorgeous sunset and tranquil scenery reflected in the rearview mirror.

It’s just one simple example.

There are hundreds. Powerful AI models have already spread widely to China, Russia, and other places the West might consider adversarial.

But aside from the fact that powerful AI models and research are already in the wild, the premise of the definition of covered models is mind-numbingly ridiculous.

SB1047 seeks to establish a threshold definition at this point in time (2024), based on the amount of computational horsepower used to train a model.

Today, this “line in the sand” is only within reach of large, well-funded organizations. Put more simply, in order to have a powerful, and perhaps “dangerous,” AI today, hundreds of millions of dollars need to be spent, perhaps billions. That money is necessary for the computational power required to train a powerful AI.

The idea that a fixed threshold, set at a single point in time, can work like this is a fallacy.

It shows that the authors of the legislation are either a) lacking knowledge of how the technology works or b) wrote it this way intentionally, because they know those who will vote for the bill will not understand it. If I had to guess, I’d say it’s (b).

The reality is that those who voted for this radical legislation don’t understand the laws of exponential growth.

This is particularly ironic, considering it’s in California, the home of Silicon Valley — where laws of exponential growth were born.

Moore’s law is the classic example of exponential growth. Over decades, it has held that the number of transistors on a chip doubles roughly every two years. This has roughly translated into the speed and performance of CPUs (central processing units) doubling every 18 months to two years or so. And it has also resulted in the cost of computation halving over a similar period of time.

Said another way, the costs per unit of compute have declined exponentially over time.

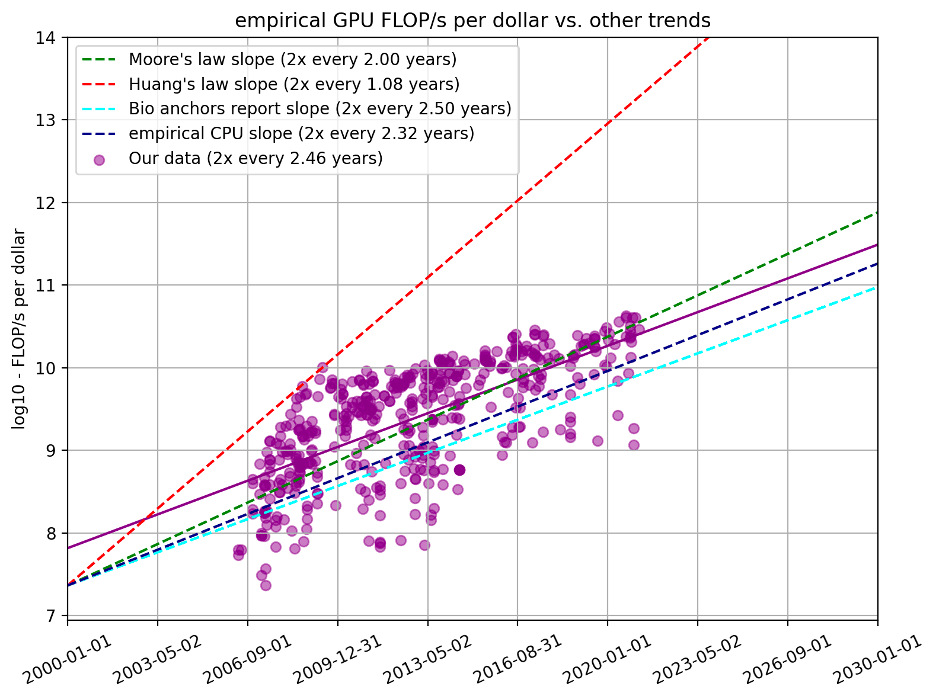

When training and running artificial intelligence, the industry primarily uses GPUs (graphics processing units). The design of GPUs is different from that of CPUs, granted, but the same exponential trends still apply.

The above chart tracks exponential growth. Its Y-axis (vertical) shows floating-point operations per second (FLOPS) per dollar on a logarithmic scale, not a linear one. If the chart were linear, we’d see the classic exponential curve, but it wouldn’t fit on the page. [Note: On this chart’s logarithmic scale, each major step up the axis is 10X the previous one.]

The chart is useful because it shows us that, on average, we experience twice as much computation power per dollar every 2.5 years.

Said another way, the cost of the same level of compute halves every 2.5 years.
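The relationship can be written out as a simple exponential. This is a sketch that treats the chart’s 2.5-year doubling as exact; $t$ is years from today, $P$ is FLOPS per dollar, $C$ is the cost of a fixed amount of compute, and the subscript 0 marks today’s value (all labels chosen here for illustration):

$$
P(t) = P_0 \cdot 2^{t/2.5}, \qquad C(t) = C_0 \cdot \frac{P_0}{P(t)} = C_0 \cdot 2^{-t/2.5}
$$

$$
\log_{10} P(t) = \log_{10} P_0 + \frac{\log_{10} 2}{2.5}\, t \approx \log_{10} P_0 + 0.12\, t
$$

Taking the logarithm turns the exponential into a straight line, which is why the trend plots as a line on a logarithmic axis: about 0.12 factors of ten per year, or one 10X step roughly every 8.3 years.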

For example, a model that costs $100 million today will only cost $50 million in 2.5 years. And five years out, that cost of training will drop to just $25 million.

7.5 years from today, the model costs only $12.5 million, putting it within range of a small tech company. And 10 years from today, just $6.25 million.
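The arithmetic above can be reproduced in a few lines of Python. This is a minimal sketch that assumes a clean halving every 2.5 years, as read off the chart; the function name `projected_cost` is hypothetical.

```python
# Minimal sketch: project the cost of training a fixed-compute model,
# assuming the cost of the same compute halves every `halving_period` years.


def projected_cost(initial_cost: float, years: float, halving_period: float = 2.5) -> float:
    """Cost of the same amount of compute after `years` (hypothetical helper)."""
    # Number of halvings elapsed is years / halving_period.
    return initial_cost * 0.5 ** (years / halving_period)


if __name__ == "__main__":
    today = 100_000_000  # the $100 million example from the text
    for years in (0, 2.5, 5, 7.5, 10):
        cost = projected_cost(today, years)
        print(f"{years:>4} years: ${cost / 1e6:,.2f} million")
```

Changing `halving_period` to 1.5 or 1.0 shows how quickly the same training run falls in price under a faster halving schedule.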

And all of this assumes that the halving of costs only happens every 2.5 years.

NVIDIA’s incredible progress as of late will likely show that the cost of GPU compute halves every 12-18 months, resulting in even more compressed time frames.
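To see how much a faster halving compresses the timeline, the sketch below computes how long it takes a $100 million training run to fall to $10 million under each halving period. The $10 million target and the function `years_to_reach` are illustrative assumptions, not figures from the bill or from NVIDIA.

```python
import math


def years_to_reach(initial_cost: float, target_cost: float, halving_period_years: float) -> float:
    """Years until a fixed amount of compute falls from initial_cost to target_cost,
    assuming its cost halves every halving_period_years (hypothetical helper)."""
    # Number of halvings needed is log2(initial / target); each takes one period.
    return halving_period_years * math.log2(initial_cost / target_cost)


# Illustrative: $100M today down to a hypothetical $10M budget.
for label, period in (("2.5 years", 2.5), ("18 months", 1.5), ("12 months", 1.0)):
    years = years_to_reach(100e6, 10e6, period)
    print(f"Cost halves every {label}: about {years:.1f} years")
```

Under these assumptions, the loop reports about 8.3, 5.0, and 3.3 years respectively, so a 12-month halving brings the same run within that budget well over twice as fast.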

Hopefully, the point is obvious.

A powerful model that is expensive — and only within the reach of large well-funded organizations today — will be in the reach of much smaller organizations a few years from now.

All of this equates to SB1047 resulting in:

Regulations like SB1047 will severely damage innovation and AI development in CA and the U.S.

It will decelerate the incredible advancements from AI that we’re witnessing right now — those that will lead to saving lives and improving quality of life.

The risk, of course, is that if such awful legislation gets enacted into law in CA, it could very well become a national model given the current political realities.

And that’s something we need to avoid at all costs.

The U.S., with the rest of the Western world, cannot afford to fall behind in AI development.

Adversaries won’t slow down their development. And advanced AI technology is just as critical for defending against AI-empowered adversaries… as it is for economic growth and improvement in quality of life.

We always welcome your feedback. We read every email and address the most common comments and questions in the Friday AMA. Please write to us here.