The race to develop the world’s first artificial general intelligence (AGI) has become panicked.

It’s not a friendly competition with industry leaders, nudging each other forward, knowing that there will be room for several players. It’s far more cutthroat than that.

And the motivations are simple to understand.

There are hundreds of billions, if not trillions, of dollars at stake for the company that wins.

Greed is a strong motivator, which is why venture capital firms are lining up left and right with seemingly limitless amounts of cash to fund any decent development team focused on artificial intelligence (AI).

The second motivation is ideological.

And the two camps are polar opposites.

On one hand, companies like Alphabet (Google) and OpenAI believe that they have a right to exercise their power and to design an AGI that will have the ability to present information in the way that these companies see fit.

They believe that they should use Gemini and GPT-4, along with any future derivatives, to censor and filter information, so that individuals are presented with the “right” information and kept “safe.” This approach carries a heavy ideological bias, one that includes rewriting history, censoring peer-reviewed scientific research, and aggressively pushing political agendas.

On the other hand are those who want to present a more neutral view of information, like Anthropic with Claude 3 Opus. And it’s probably no surprise that Elon Musk and his team at xAI are working towards an even clearer goal of maximizing the truth with their generative AI, known as Grok.

This battle has become urgent because of the rapid pace of progress towards developing an AGI.

Years ago, most “experts” in the field thought it would be decades from now. Those same people have been radically pulling in their timelines.

Last decade, I predicted that we’d see an AGI by 2028. Even I’m recognizing that I may have been too conservative.

The issue at hand right now is that AI is software. And software can be distributed almost overnight.

Any kind of software service can be hosted in remote data centers, making the service accessible to anyone with a smartphone or a computer on the planet.

And that’s the risk.

The company, or companies, that have access to widespread software distribution channels have an inherent advantage in deploying software quickly. And that means that a dominant standard for AGI could be set in a matter of months, making it nearly impossible for another offering to replace it.

We’ve seen this play out before, which is why this is such a sensitive topic. Alphabet (Google) dominates search (Google) and video search (YouTube), and it controls the majority of the world’s smartphone operating systems (Android OS).

Microsoft controls the majority of the world’s computer operating systems (Windows), the world’s enterprise productivity software (Microsoft Office and Teams), and the world’s top social media platform for professionals (LinkedIn).

Both companies are heavily biased and use their dominant positions in an effort to influence, censor, ban, and control what we all think. They are part of the Censorship Industrial Complex.

That so few are “in charge” has become an existential risk to free-thinking, democratic societies around the world.

And that’s exactly why Elon Musk filed a lawsuit against OpenAI days ago, on February 29.

For those interested in the details, you can find it in the Superior Court of California filing here.

Given the weight of what is happening right now, and the world’s accelerated push towards artificial general intelligence (AGI), this lawsuit is extremely interesting. And the arguments are easy enough to understand:

Now, if the hair isn’t standing up on the back of your neck right now, it should be…

Musk is one of the smartest people on the planet. And he has already built the world’s most successful AI company — Tesla.

Better yet, he was a founder of OpenAI (the non-profit) and has deep ties into that world.

I’d be willing to bet just about anything that he knows a lot more about OpenAI’s Q* breakthroughs than is publicly known.

Perhaps the most ironic part is that Musk resigned from OpenAI in 2018. At the time, he felt that OpenAI was too far behind Google/DeepMind and needed to move faster. Otherwise, Google would develop AGI, deploy it to billions of Android OS devices around the world, and build it into Google’s search product.

Musk offered to take over and run OpenAI himself, but the board objected.

But Musk didn’t give up.

He developed deep neural network technology capable of driving a car, truck, or semi. And that same technology is being used to “power” Tesla’s humanoid robot Optimus.

Musk went so far as to build Dojo, one of the world’s most powerful supercomputers, explicitly optimized for training AIs.

And he has already launched Grok, X’s answer to OpenAI’s GPT-4 or Google’s Gemini.

The big difference, of course, being that Musk and his team have designed Grok as a “maximum truth-seeking AI.”

It is designed in the same spirit as X (Twitter): to protect freedom of speech and to prevent the censorship of truths, without any regard for what some might consider political correctness.

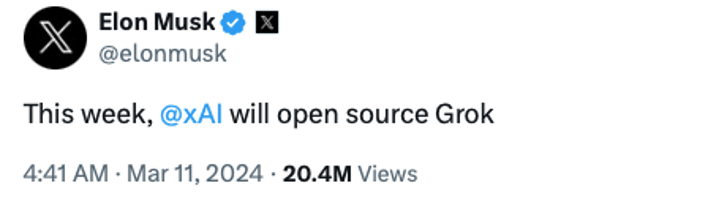

And while this is certainly not a coincidence given the lawsuit, early this morning, Musk dropped a “bomb.”

Musk is pre-empting the other players by open-sourcing his own large language model (LLM). This stands in stark contrast to the proprietary approaches taken by Microsoft, Google, and of course (Not So) OpenAI.

As for the lawsuit, I doubt this is one that Musk can win.

But of course, he knows that.

I can guess what some are thinking right now.

“Yeah right, Jeff, give me a break. Musk just hopes to slow OpenAI down with this lawsuit so he can take the upper hand.”

I’m pretty sure winning this legal battle is not Musk’s goal.

He knows how much is at stake.

Musk isn’t in it for the money. He won’t make any money open-sourcing Grok. He’s in it for the truth.

He spent $44 billion acquiring Twitter (now X) when it was in terrible shape, and deeply corrupted by the same biases that infect Google and OpenAI. It was all done to protect freedom of speech, and to become a platform upon which Musk could launch a truth-seeking AI.

The lawsuit appears to be designed in hopes of scuttling a potential future deal between OpenAI and Microsoft for Q* (Q star), by categorizing Q* as an artificial general intelligence (AGI), which it may very well be (or become).

I also believe that the lawsuit is designed to bring the significance of the OpenAI/Microsoft deal out into the open.

No matter how clever Microsoft has been in designing its “smoke and mirrors” deal with OpenAI, to create the appearance that it doesn’t have control over OpenAI, the reality is that Microsoft does effectively have control.

And when the proceedings of the lawsuit make this point very clear, they’ll bring to light the dangers of a near monopoly (i.e. Microsoft) having control over something as powerful as an AGI.

Let’s hope Musk is successful.

Hi Jeff, concerning your article on Musk versus Sam Altman, I really would like you to be more even-handed. Musk knew the company would have to be a for-profit. It was not a surprise. You could have put links to additional information. Will Grok be accessible for free for people who don't pay for Twitter? If not, then Grok is for-profit and I do not see the difference between Musk and Altman. What say you? FYI: I am very much up to date on the field of AI, both from the qualitative and the quantitative point of view. — Gordon E.

Hi Gordon,

I’m sure that we’re all missing some of the nuance and inside story on this topic that would probably be useful. But what we do know is that Musk originally committed $100 million of his capital for OpenAI to be a non-profit research organization that would produce open source AI software.

His proposal to take control of OpenAI was largely driven by the recognition that OpenAI wasn’t moving fast enough to catch up with Google’s AI developments. The risk was this: if OpenAI didn’t develop and release neutral, unbiased open-source AI software before Google did, there was an existential risk that Google would distribute, on the scale of billions of devices, heavily biased, manipulative AI software designed to control how populations think.

The other option Musk proposed was to fold OpenAI into Tesla, again so that he could gain control of the project and accelerate development.

While neither of those two things happened, we do know that Musk paid $44 billion to acquire Twitter to protect freedom of speech, that he and his team developed their own large language model, Grok, and that he has announced that X will open source Grok.

X (Twitter) is a for-profit organization that generates its revenue primarily through advertising, and also through subscriptions. With that said, Grok will be open sourced in the coming months.

While we’re still lacking details, I expect that Grok will be free to use as an open source product. That means that other companies or entities could obtain the source code to Grok without paying licensing fees.

Assuming this happens, and I believe that it will, that will confirm that Musk’s original intentions to accelerate development at OpenAI as a non-profit were sincere.

Gordon, let’s track this together and we’ll revisit once we have more details from X concerning Grok in the coming months.

New reader? Welcome to the Outer Limits with Jeff Brown.