Horsepower.

Raw horsepower.

After watching NVIDIA CEO Jensen Huang’s keynote speech at NVIDIA’s GTC conference on Monday, I couldn’t help but think about it.

While all of the GTC conferences have centered around artificial intelligence since 2015, this year stood out.

It’s all about power this week — computing power… and having the “horses” to accelerate even faster.

AI is about to kick into a new gear… and I’m not exaggerating at all.

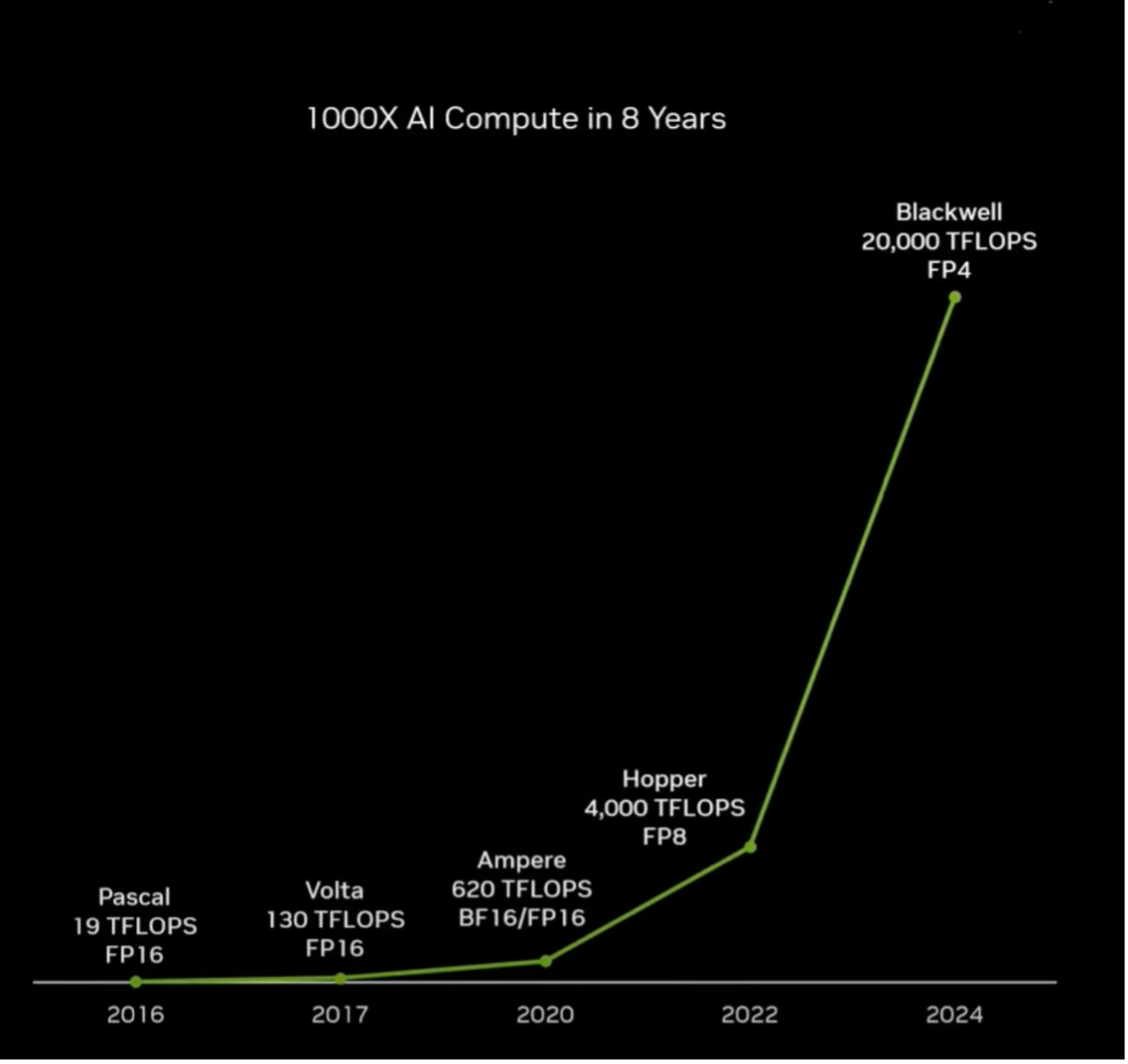

We can see it in one simple chart that Huang shared:

A five-year-old child could look at the above chart and know that something incredible just happened.

It’s impossible to miss the inflection point in the transition from NVIDIA’s current GPU platform — Hopper — to its newly announced GPU platform: Blackwell.

To put things in perspective, Huang pointed out that in the “PC years,” computer processing power increased 100-times over the course of a decade.

And now NVIDIA has demonstrated a 1000X increase in computational power since 2016. That’s just 8 years.
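To make those two growth rates easier to compare, we can convert each into an average yearly multiplier. This is only a rough sketch: it assumes smooth compound growth, which real hardware generations don't follow, and the function name is ours for illustration.

```python
def annual_growth_factor(total_gain: float, years: float) -> float:
    """Return the constant yearly multiplier that compounds to total_gain."""
    return total_gain ** (1.0 / years)

# Figures quoted in the keynote, taken at face value.
pc_years = annual_growth_factor(100, 10)          # 100x over a decade
nvidia_since_2016 = annual_growth_factor(1000, 8)  # 1000x over 8 years

print(f"PC years:          about {pc_years:.2f}x per year")
print(f"NVIDIA since 2016: about {nvidia_since_2016:.2f}x per year")
```

Under that assumption, the PC era works out to roughly 1.6x per year, while NVIDIA's pace since 2016 is closer to 2.4x per year.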

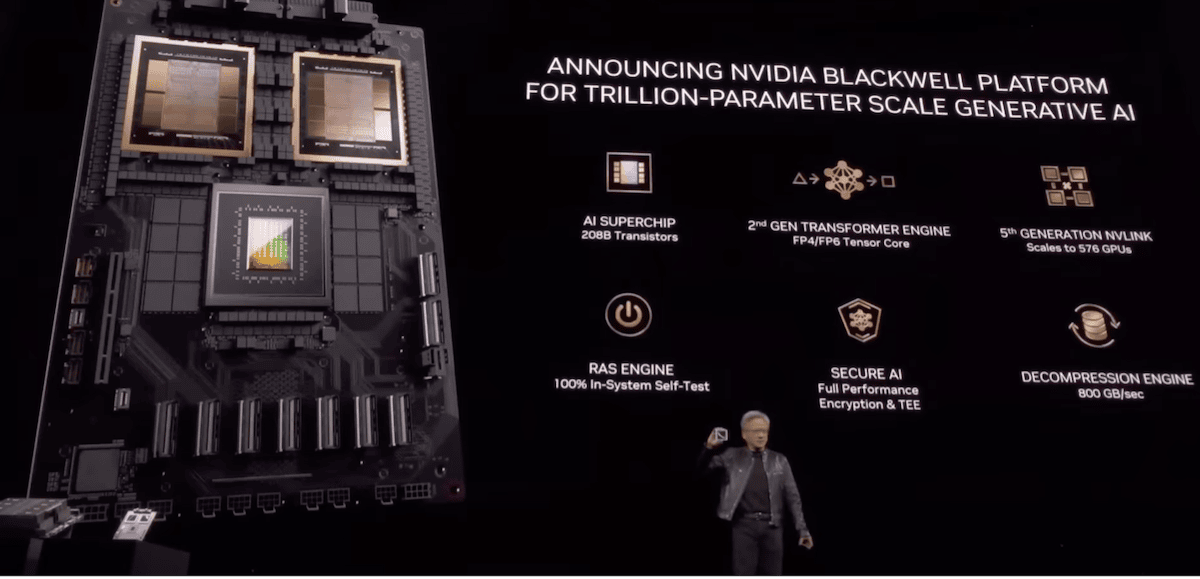

Blackwell was intentionally designed to support the revolution in generative AI.

More specifically, it has been designed to accelerate the training of large language models (LLMs).

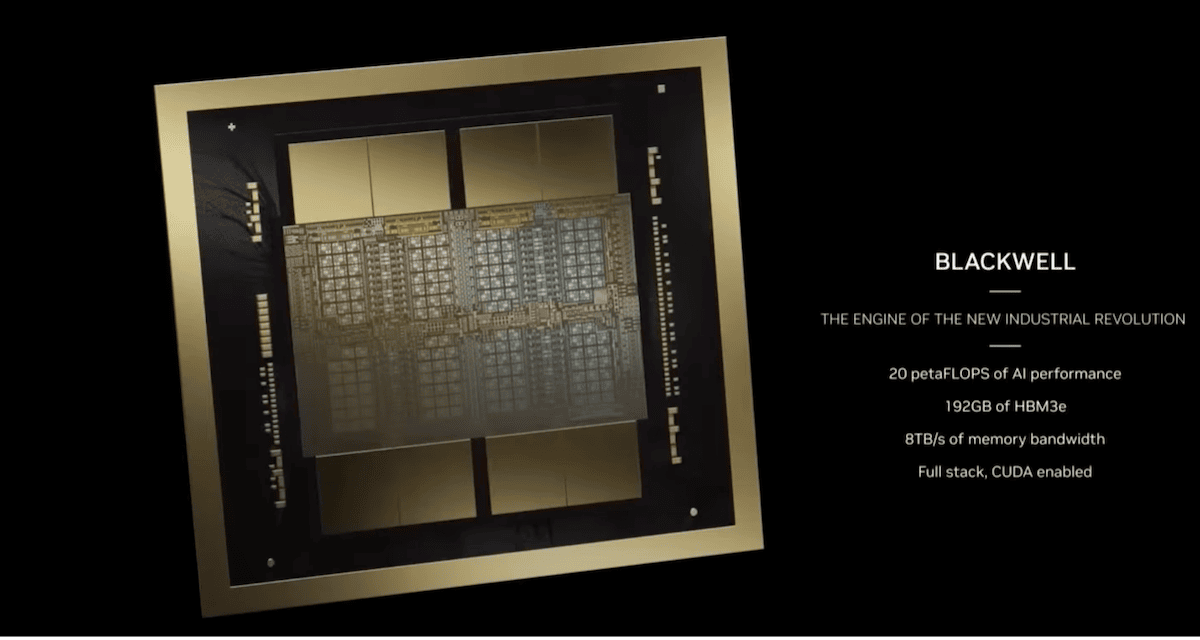

Below is a picture of a Blackwell GPU semiconductor. If we look closely, we can see that it is actually two GPUs (the four blocks on the left and four on the right) that have been interconnected to each other.

The end result is the most powerful GPU (graphics processing unit) that the world has ever seen, one with 208 billion transistors capable of operating at 20 petaFLOPs. One petaFLOP is one quadrillion floating-point operations per second.

We can think of petaFLOPs as a measure of how fast a processor performs. For example, an Intel-based laptop with an Intel Core i7 processor will operate at around 0.2 teraFLOPs. That represents just 0.0002 petaFLOPs. And Blackwell operates at 20 petaFLOPs.
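For anyone who wants to check the unit conversion, here is a small sketch. It takes both figures exactly as quoted and ignores the fact that the two chips may be measured at different numeric precisions, so the ratio is a rough sense of scale rather than a benchmark.

```python
import math

TERA = 1e12  # operations per second in one teraFLOP
PETA = 1e15  # operations per second in one petaFLOP

laptop_flops = 0.2 * TERA     # Intel Core i7 laptop, as quoted
blackwell_flops = 20 * PETA   # one Blackwell GPU, as quoted

laptop_in_petaflops = laptop_flops / PETA
speedup = blackwell_flops / laptop_flops

print(f"Laptop: {laptop_in_petaflops:.4f} petaFLOPs")
print(f"Blackwell is roughly {speedup:,.0f} times faster on paper")
```

On paper, that puts a single Blackwell GPU at around 100,000 times the quoted laptop figure.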

The numbers and performance are mindboggling for a single chip. And it gets better…

NVIDIA pairs Blackwell chips together, with other semiconductors and components, to make a line card (shown below) that slides into a server rack.

Below is what a rack of these line cards looks like from the front and back. It’s about as tall as a refrigerator and about half the width.

Yet despite its size, it’s a supercomputer that could sit in the corner of a room or office.

This is the Blackwell Platform.

What’s extraordinary is that it is capable of operating at 720 petaFLOPs, which is almost an exaFLOP (1,000 petaFLOPs equal an exaFLOP).

The single rack of GPUs above is almost as powerful as the world’s fastest supercomputers in terms of operations per second. And the Blackwell Platform is capable of supporting a throughput of 130 terabytes per second, roughly equivalent to the “aggregate bandwidth of the internet.”

600,000 parts at 3,000 pounds — pure muscle.

What does it all mean?

The answer will probably elicit a combination of excitement… and sheer terror.

To understand the difference, let’s compare the existing Hopper Platform with the newly announced Blackwell Platform.

Training a 1.8 trillion parameter large language model (LLM) on the Hopper platform of 8,000 GPUs will take 90 days and 15 megawatts of electricity. This is about the size of GPT-4 (reportedly 1.75 trillion parameters), the large language model behind the current version of OpenAI’s ChatGPT.

Training the same 1.8 trillion parameter LLM on the Blackwell platform in the same 90 days can be done with just 2,000 GPUs (down from 8,000 GPUs) and 4 megawatts of power (down from 15 megawatts). Alternatively, the same LLM can be trained in a matter of days with 8,000 Blackwell GPUs.

Training a 1.8 trillion parameter model on Hopper: 8000 GPUs, 15 Megawatts, 90 days.

Training with Blackwell: 2000 GPUs in 90 days, 4 Megawatts of power.
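The comparison above can be worked through in a few lines. This is a back-of-the-envelope sketch: it assumes the quoted power draw is constant for the entire run, and the last calculation assumes training scales perfectly linearly with GPU count, which real clusters rarely achieve. The `TrainingRun` class is just an illustrative container.

```python
from dataclasses import dataclass

@dataclass
class TrainingRun:
    gpus: int
    megawatts: float
    days: float

    @property
    def gpu_days(self) -> float:
        """Total GPU time consumed by the run."""
        return self.gpus * self.days

    @property
    def energy_mwh(self) -> float:
        """Total energy in megawatt-hours, assuming constant power draw."""
        return self.megawatts * self.days * 24

hopper = TrainingRun(gpus=8000, megawatts=15, days=90)
blackwell = TrainingRun(gpus=2000, megawatts=4, days=90)

gpu_reduction = hopper.gpu_days / blackwell.gpu_days
energy_reduction = hopper.energy_mwh / blackwell.energy_mwh

# Assumption: perfect linear scaling if we used 8,000 Blackwell GPUs instead.
days_with_8000_blackwell = blackwell.gpu_days / 8000

print(f"Hopper energy:    {hopper.energy_mwh:,.0f} MWh")
print(f"Blackwell energy: {blackwell.energy_mwh:,.0f} MWh")
print(f"GPU reduction:    {gpu_reduction:.2f}x")
print(f"Energy reduction: {energy_reduction:.2f}x")
print(f"Days on 8,000 Blackwell GPUs: {days_with_8000_blackwell:.1f}")
```

For this specific training example, the quoted numbers imply about 4x fewer GPUs and about 3.75x less energy for the same 90-day run.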

NVIDIA states that the performance increase is up to 30X and the reduction in cost and energy can be up to 25X when compared to the Hopper Platform. These numbers were derived from a like-for-like training set.

Those are marketing figures that represent an ideal scenario, but the order of magnitude of the improvements is still about right.

Faster, cheaper, and less energy consumption per unit of computational power.

Does that mean that there will be less energy consumed by data centers around the world?

Absolutely not.

Energy consumption is going to spike.

The Blackwell Platform is so powerful, it will be used to train thousands of AI models… which will be used to automate, predict, and optimize complex problems for governments, corporations, and even individuals around the world.

The existence of Blackwell will be irresistible to the market. It’s so much power, capable of solving very complex challenges.

It will accelerate what is already happening. Blackwell is a step function increase in computational power designed for AI.

To put things in perspective, there is only one confirmed exaFLOP supercomputer in the world. That’s Frontier, which is operated at the Oak Ridge National Laboratory in Tennessee.

Frontier operates at 1.194 exaFLOPs. Next in line is Aurora at the Argonne National Laboratory at “only” 585 petaFLOPs.

That means that the Blackwell Platform, a single rack of GPUs, is more powerful than the supercomputer at Argonne in terms of raw computational power.

The important distinction is that Blackwell is designed for artificial intelligence (AI) applications, and not general purpose computing like Frontier and Aurora.
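To line up the three quoted figures side by side, here is a quick sketch. It takes every number at face value. As noted above, these systems are built for different workloads and are likely measured at different numeric precisions, so this is a sense-of-scale comparison, not a like-for-like benchmark.

```python
PETA_PER_EXA = 1000  # 1 exaFLOP = 1,000 petaFLOPs

# Quoted peak figures in petaFLOPs.
systems_pflops = {
    "Frontier (Oak Ridge)": 1.194 * PETA_PER_EXA,
    "Blackwell Platform (one rack)": 720,
    "Aurora (Argonne)": 585,
}

# Print from fastest to slowest.
for name, pflops in sorted(systems_pflops.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:32s} {pflops:8,.0f} petaFLOPs")

rack = systems_pflops["Blackwell Platform (one rack)"]
rack_vs_aurora = rack / systems_pflops["Aurora (Argonne)"]
rack_vs_frontier = rack / systems_pflops["Frontier (Oak Ridge)"]

print(f"Rack vs. Aurora:   {rack_vs_aurora:.2f}x")
print(f"Rack vs. Frontier: {rack_vs_frontier:.2f}x")
```

On the quoted numbers, one rack comes in at a bit over 1.2 times Aurora and roughly 60% of Frontier.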

Where things get a bit unbelievable, and perhaps scary for some, is when NVIDIA lines up 8 of the Blackwell Platform racks, along with a few more racks for storage, control, and interconnect, and we end up with a beast capable of 11.5 exaFLOPs.

This is the DGX SuperPOD shown below:

It’s hard to imagine what will be possible with a single DGX SuperPOD, arguably the world’s most powerful supercomputer, which is capable of fitting into a basement or even a single room or laboratory.
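A quick sanity check on the SuperPOD figure is worth doing, because simply multiplying the single-rack number by eight does not reach 11.5 exaFLOPs. The sketch below uses only the figures quoted in this issue. One plausible explanation, which is our assumption rather than something stated here, is that the rack and SuperPOD figures are quoted at different numeric precisions.

```python
PETA_PER_EXA = 1000  # 1 exaFLOP = 1,000 petaFLOPs

rack_pflops = 720              # one Blackwell Platform rack, as quoted
racks_in_superpod = 8
quoted_superpod_exaflops = 11.5
frontier_exaflops = 1.194

# Naive estimate: eight racks at the quoted single-rack figure.
naive_superpod_exaflops = rack_pflops * racks_in_superpod / PETA_PER_EXA

gap = quoted_superpod_exaflops / naive_superpod_exaflops
superpod_vs_frontier = quoted_superpod_exaflops / frontier_exaflops

print(f"Eight racks at 720 petaFLOPs: {naive_superpod_exaflops:.2f} exaFLOPs")
print(f"Quoted SuperPOD figure:       {quoted_superpod_exaflops:.1f} exaFLOPs")
print(f"Quoted / naive estimate:      {gap:.2f}x")
print(f"Quoted SuperPOD vs. Frontier: {superpod_vs_frontier:.1f}x")
```

The quoted SuperPOD figure is almost exactly double the naive eight-rack estimate, which is what we would expect if it were measured at a lower precision than the 720 petaFLOP rack figure.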

What this means is even more frequent breakthroughs in AI, and in even shorter periods of time.

We can imagine waves of Mongol hordes rampaging across the planet and coming directly our way. These are the companies and governments that will soon all be training AI on Blackwell, primarily for good and profit, though some will aim to do harm.

It will be total chaos. And for a period measured in years.

The world simply cannot adjust that quickly. There is so much disruption on the horizon, it will be disorienting for many.

This is a step function. Whether it’s a 10x or 30x performance boost, it doesn’t matter. It’s an order of magnitude more.

We just jumped a large step closer to AGI, by at least a year. Does that mean 2026 now? Less than 24 months away?

Time will soon tell us. And, as a true E/ACC, I believe we will move even closer — even if it’s through chaos — to meaningful technological progress for our planet and species.

Despite the show of force with the Blackwell Platform announcement, and all the discussion around inference which we explored in Monday’s Outer Limits — The Tech Company that Will Accelerate AI, there was one other critical product announcement from NVIDIA that we should pay close attention to.

Just as NVIDIA created its DRIVE platform for automotive manufacturers back in 2015 to help them gain a step up in integrating autonomous driving technology into their cars, NVIDIA has developed a technology development platform for humanoid robots.

NVIDIA was ahead of the game back in 2015 with its DRIVE platform, and it is catching up with its newly announced Project GR00T (Generalist Robot 00 Technology).

Very few took Tesla’s robotics team, or its ambitions for its humanoid robot Optimus, seriously two years ago, but now every player in the industry is racing to catch up.

NVIDIA’s GR00T will help those companies accelerate development by building upon the work that NVIDIA has already done.

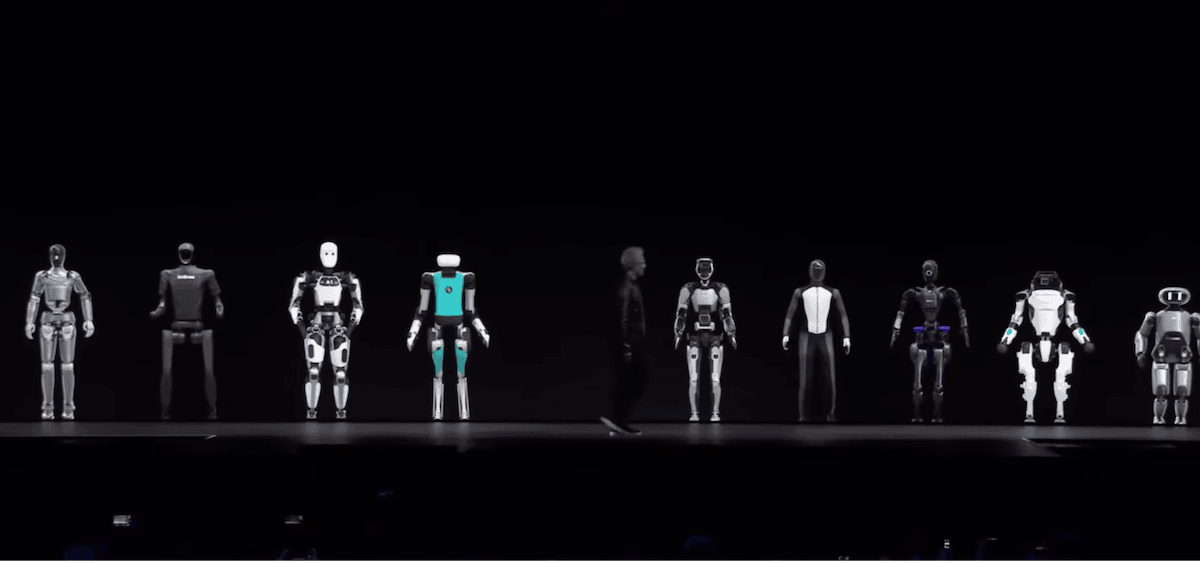

On stage behind Jensen Huang this week stood 9 humanoid robots, seen below. I bet most readers will be able to identify the one just to his left — that’s the Agility Robotics Digit that we’ve been tracking closely.

The one comment from Huang that summed up the purpose of Project GR00T was that “everything that moves will become robotic.”

That’s not too far off from what will become reality.

Whether it’s cars, trucks, vacuum cleaners, pickers in a distribution facility, manufacturing, loaders, and so on, these will all become robotic and autonomous… resulting in a productivity jump unlike anything we’ve seen in history.

So it’s no surprise that NVIDIA built a platform for robotics companies to accelerate this future reality. When those companies develop on NVIDIA’s hardware and software platforms, it becomes more likely that they will buy NVIDIA semiconductors for their future robots.

This is a smart business strategy that will not only benefit NVIDIA but will accelerate the development of robotics for just about any application we can imagine.

As a reminder, these GPUs are general purpose — the workhorses of AI.

NVIDIA’s success only creates opportunity for application-specific semiconductor companies like Groq, which specializes in inference, as we discussed in Outer Limits — The Tech Company that Will Accelerate AI.

Yes, the news out of NVIDIA this week is very bullish for NVIDIA in the long term. But as a reminder, the company is trading right now at 36-times its annual sales (not profits).

Despite the current momentum in NVIDIA’s stock, it remains seriously overvalued, so those trading on momentum should keep tight stops for a stock like this.

New reader? Welcome to the Outer Limits with Jeff Brown. We encourage you to visit our FAQ. You may also catch up on past issues in the Outer Limits archive.
