It was one of the best things I could have ever done.

Living and working as a technology executive in Asia, and doing so for as long as I did, did wonders for my personal and professional development.

Being removed for an extended period of time (years) from our home country gives us the space to see things through a different lens, including where we came from.

When I moved to Japan in the second half of the ’90s, it was remarkable to see how far ahead Japan’s technological adoption was compared to the rest of the world. The maturity of Japan’s wireless networks and the wide range of mobile devices were extraordinary at that time.

And not only could I see the emerging technology as a consumer, I spent my days meeting with technology and communications companies throughout the region, allowing me to see behind the curtain and understand what was coming.

In the technology-heavy markets of Japan, South Korea, Singapore, Taiwan, and mainland China, the hustle and activity have always been nonstop. The intellectual property and product design may come from the Western markets, but this is where the products are made.

And last night, in Taipei, the second largest consumer electronics show in the world after CES — Computex — kicked off with a keynote speech from NVIDIA CEO Jensen Huang.

NVIDIA’s presence at Computex each year tends to build upon announcements made at its annual GTC technology conference, held in the U.S. every spring.

At Computex, NVIDIA usually focuses its energy on its local Taiwanese business partners that manufacture its semiconductors and use those semiconductors for electronics products.

But this year was different. Huang did something unexpected.

He announced the next-next-generation GPU platform, even before the next-generation GPU has shipped.

The Hopper architecture is what’s shipping now. Blackwell is what will start shipping in the second half of this year. (We covered Huang and NVIDIA’s launch of the Blackwell architecture back in March right here in Outer Limits — “Everything That Moves Will Become Robotic”.)

And Rubin, the new architecture announced for production in 2026, is the next-next architecture.

Rubin is named after Vera Rubin, whose work provided evidence of the existence of dark matter in our universe.

There wasn’t much shared in terms of details regarding the new Rubin architecture, but that wasn’t really the point.

For a company that has become the most valuable semiconductor company in the world — a company that could just coast and let the business come to it — NVIDIA wants nothing of the sort.

It’s not resting. It’s speeding up.

And NVIDIA sets the pace for the entire industry.

It’s now pushing a new architecture release cadence of once a year. This would be nothing but a mirage for a company like Intel. But for NVIDIA, it has become like clockwork.

And there’s one thing that we can be certain of regarding the Rubin platform: It will be more powerful and more efficient than Blackwell, just as every new architecture is compared to its predecessor.

There was some odd messaging this year, though, that just didn’t make much sense.

The messaging was summed up in something Huang said:

“accelerated computing is sustainable computing”

Odd. Vague…

Huang was referring to how the combination of GPUs (these are the graphics processors for AI) and CPUs (these are the central processors for controlling a server with GPUs) — with the new architecture — could speed things up by up to 100x… while only increasing power consumption by 3x.

The punchline was 25x more performance per watt compared to CPUs alone.
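For readers who like to check the math, here is a quick sketch of how those figures relate. It assumes performance per watt is simply the speedup divided by the increase in power draw, and the function name is my own, for illustration only. Taken at face value, 100x the speed at 3x the power works out to about 33x, so the 25x headline figure presumably reflects something less than the "up to" best case.

```python
def perf_per_watt_gain(speedup: float, power_ratio: float) -> float:
    """Relative performance per watt: speedup divided by the increase in power draw."""
    if power_ratio <= 0:
        raise ValueError("power_ratio must be positive")
    return speedup / power_ratio


# Figures quoted from the keynote: up to 100x faster at 3x the power.
print(round(perf_per_watt_gain(100, 3), 1))  # 33.3

# A 25x gain at 3x the power corresponds to a 75x speedup.
print(perf_per_watt_gain(75, 3))  # 25.0
```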

Huang went so far as to say:

“The more you buy, the more you save”

I couldn’t help but laugh. I’ve heard that one before…

He was, of course, referring to the energy savings. The real nuance here is that with every generation of semiconductor architecture, the performance per watt increases. That’s another way of saying that we use less energy for each unit of computing performance.

And while this is technically true, the reality is that with each new powerful, more efficient GPU architecture, our energy consumption increases… a lot.

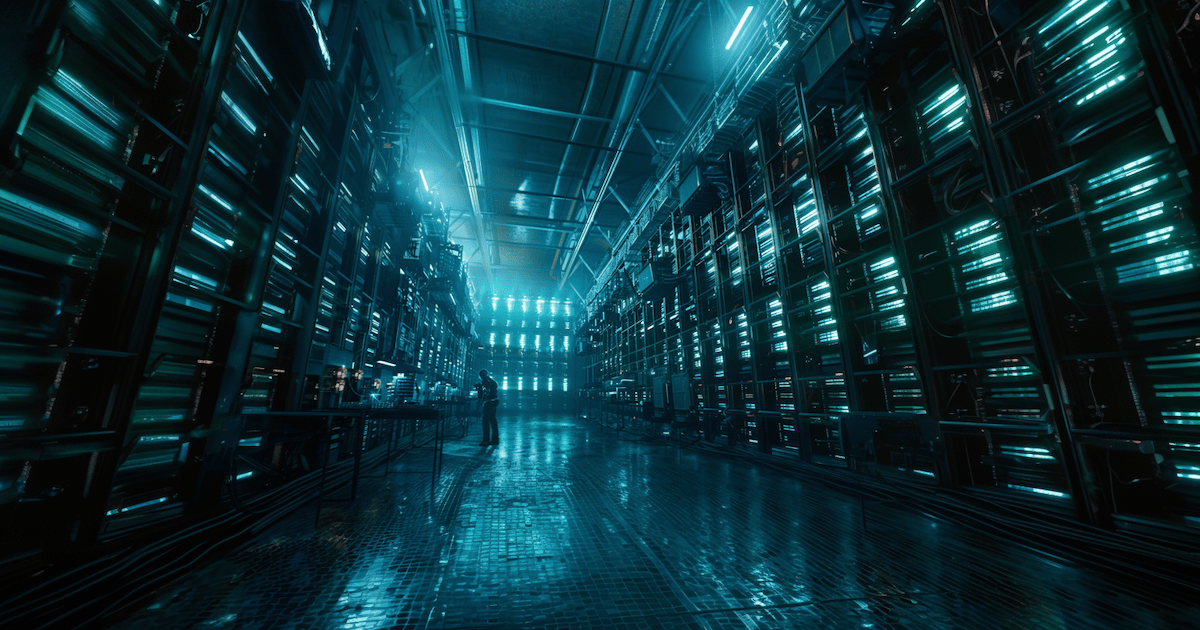

By way of example, just before the generative artificial intelligence (gen AI) boom kicked off in 2023, the world’s data centers consumed about 1.17% of the global electricity supply.

At the current pace of development and adoption of these advanced computing systems, that number will nearly double to about 2.2% by 2026, the year that NVIDIA’s Rubin will start shipping.

And by 2030, the share of the world’s electricity consumed by data centers will have skyrocketed roughly fourfold from that starting point, to somewhere between 4.5% and 5.5% of the world’s electricity supplies.
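Here is a back-of-the-envelope check of those projections, using only the percentages quoted above. The variable and function names are illustrative. Keep in mind that these are multiples of the share of global supply. If total supply grows over the same period, absolute consumption grows by even more.

```python
BASELINE_SHARE = 1.17  # % of global electricity supply, the pre-boom figure quoted above


def multiple_of_baseline(share_pct: float, baseline_pct: float = BASELINE_SHARE) -> float:
    """How many times larger a projected share is than the baseline share."""
    return share_pct / baseline_pct


projections = {"2026": 2.2, "2030 (low)": 4.5, "2030 (high)": 5.5}

for label, share in projections.items():
    print(f"{label}: {share}% is {multiple_of_baseline(share):.1f}x the baseline share")
# 2026: 2.2% is 1.9x the baseline share
# 2030 (low): 4.5% is 3.8x the baseline share
# 2030 (high): 5.5% is 4.7x the baseline share
```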

The obvious point, of course, is that this yearly cadence of new (power-efficient) semiconductor architectures isn’t saving energy at all, contrary to Huang’s claims…

It’s increasing the use of electricity. By accelerating the adoption of AI, each new architecture is dramatically increasing the world’s electricity requirements.
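The logic is simple enough to sketch in a few lines. Total energy use scales with how much computing we do divided by how efficiently we do it. In the sketch below, the 25x efficiency gain is the keynote figure, while the demand growth numbers are purely hypothetical values I picked to illustrate the point. They are not forecasts.

```python
def relative_energy_use(demand_growth: float, efficiency_gain: float) -> float:
    """Energy use relative to today, given growth in computing demand
    and the gain in performance per watt."""
    if efficiency_gain <= 0:
        raise ValueError("efficiency_gain must be positive")
    return demand_growth / efficiency_gain


EFFICIENCY_GAIN = 25  # performance-per-watt figure quoted from the keynote

# Hypothetical: demand grows 10x, slower than efficiency improves, so energy use falls.
print(relative_energy_use(10, EFFICIENCY_GAIN))  # 0.4

# Hypothetical: demand grows 100x, faster than efficiency improves, so energy use rises.
print(relative_energy_use(100, EFFICIENCY_GAIN))  # 4.0
```

Energy is only saved when efficiency improves faster than demand grows. The data center figures above suggest the opposite is happening.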

To add fuel to the trend, NVIDIA also announced a new software initiative called NVIDIA Inference Microservices (NVIDIA NIM)…

The technical nomenclature isn’t important, but what it means is.

What NVIDIA released to software developers is a number of software “containers” that are optimized to run on NVIDIA’s hardware (its GPUs). The easiest way to think of a container is as a prepackaged module of software, bundled with everything it needs, that is ready to run in specific environments.

That’s what these microservices are. NVIDIA did the heavy lifting with software programming, modularization, and optimization so that developers don’t have to do that work. They just plug into NVIDIA’s software module and run on NVIDIA hardware.

Naturally, the focus of these containers is all about artificial intelligence (AI). For example, a software developer who has created a software service that uses a large language model (LLM) like Meta’s Llama 3 can just use NVIDIA’s container for that model and run more efficiently on NVIDIA hardware. There’s no longer any need to spend weeks trying to optimize.

Put simply, the release of NVIDIA’s inference microservices will make it simpler for companies to use AI. This will accelerate adoption of AI software further…

And yes, that means far more electricity will be required. But it doesn’t stop there…

We will need so many more resources to feed the AI demand that’s growing exponentially now…

More copper, lithium, rare earth metals…

We will need more fiber optic cables and, of course, more semiconductors.

We will need so much more than most of us realize.

We always welcome your feedback. We read every email and address the most common comments and questions in the Friday AMA. Please write to us here.